Behind the Scenes of ‘KIHA’ with iClone and Unreal Engine

Dom Fred

Dom began his career in the television advertising industry, directing commercials using new digital and 3D production tools. Over the past 20 years, he has also worked on music videos and documentaries for a global clientele.

Dom has practiced martial arts since childhood, mastering disciplines such as Taekwondo, Aikido, and Kung-Fu. His skill in Taekwondo, at both the Olympic and spectacular levels, revealed a talent for stunt work and acrobatics from a young age.

In 2007, Dom established his own company, DOM ANIMATION STUDIO, specializing in production and post-production. His signature approach is to incorporate 3D characters into real environments, integrate special effects (SFX), and create martial arts and acrobatic action sequences for commercials.

In 2010, Dom directed a short action film utilizing camera tracking, motion capture, and 3D compositing techniques. Two years later, he produced and directed his first independent short films, which received several awards in Los Angeles, Texas, Las Vegas, and New York for Best Action Sequences, Best Action Director, and Best Short Film.

Introduction: Unveiling the Vision

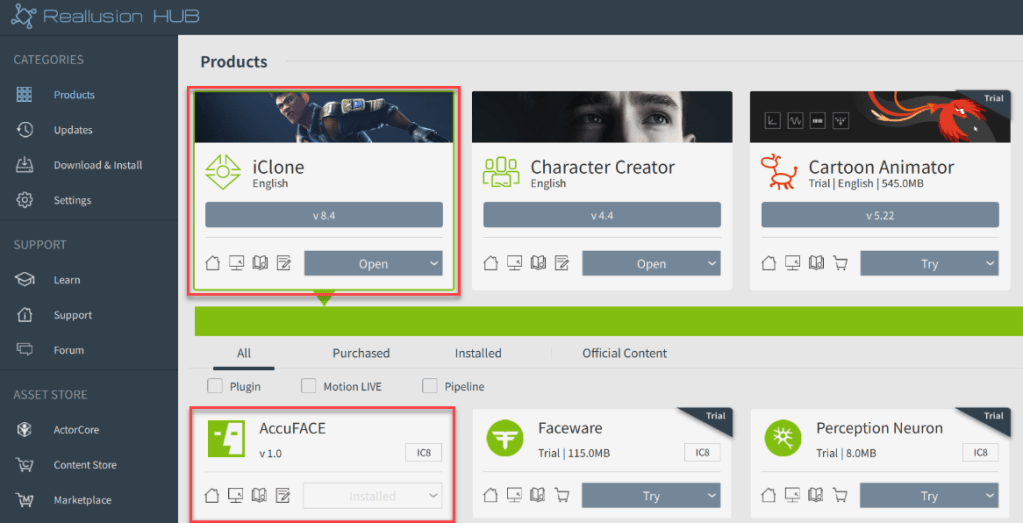

“KIHA,” a science fiction short film, showcases the creativity and technical skill of director and Maya 3D animator Dom Fred. This quick behind-the-scenes look shows how iClone was integrated into the production to bring each detail to life.

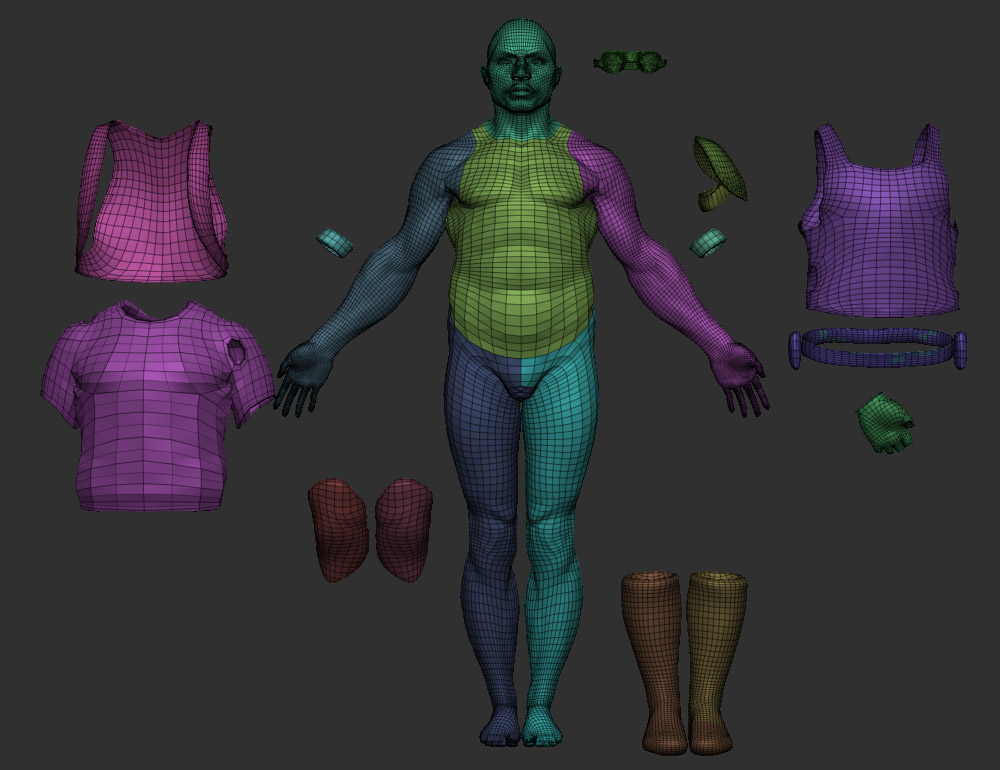

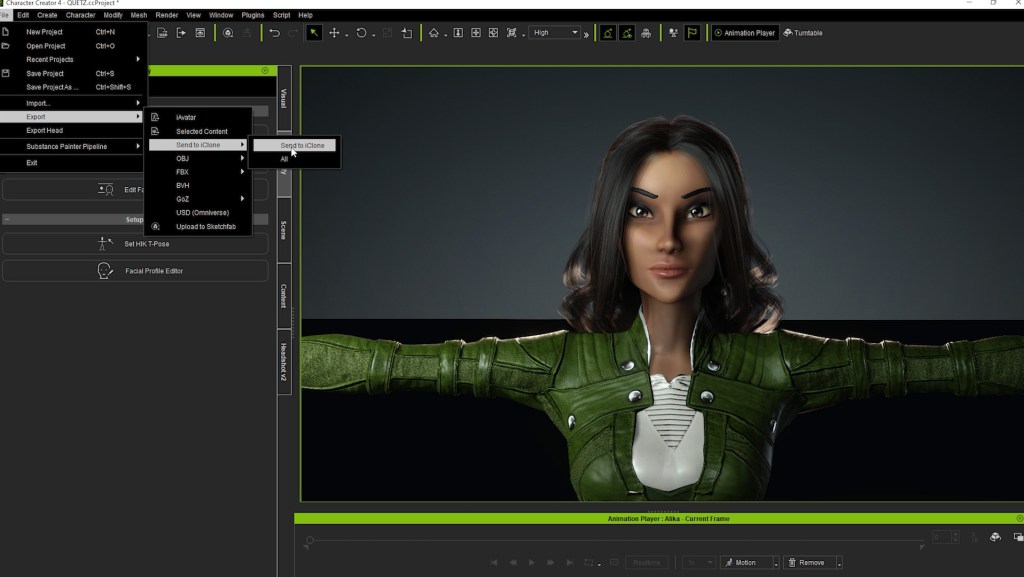

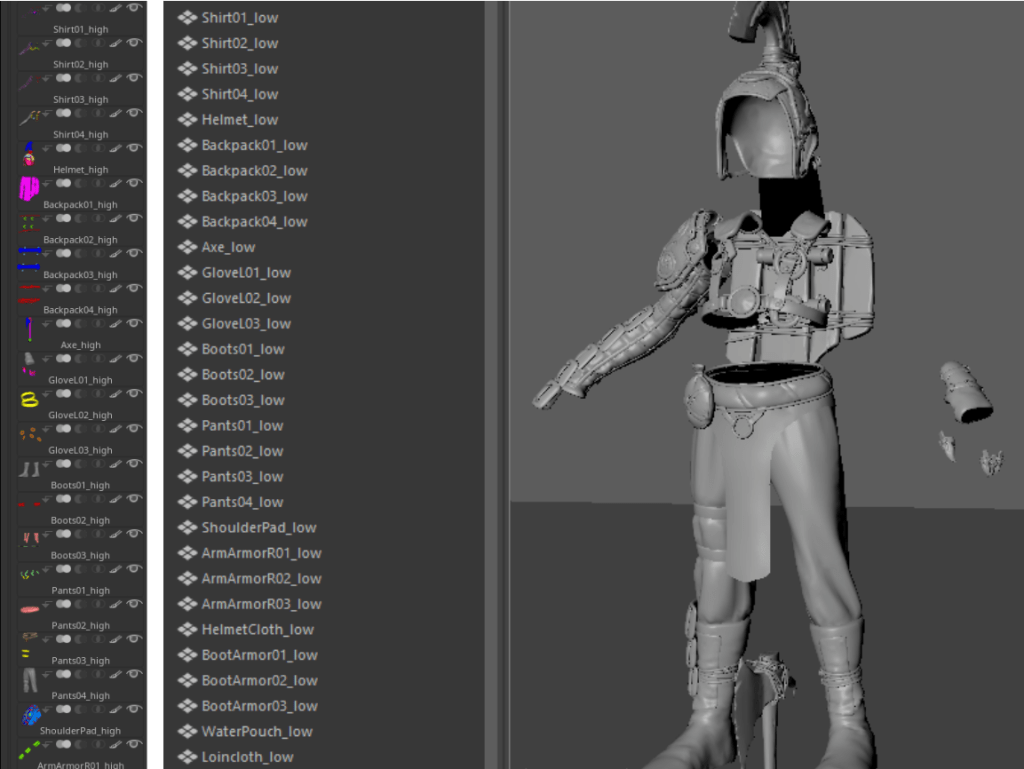

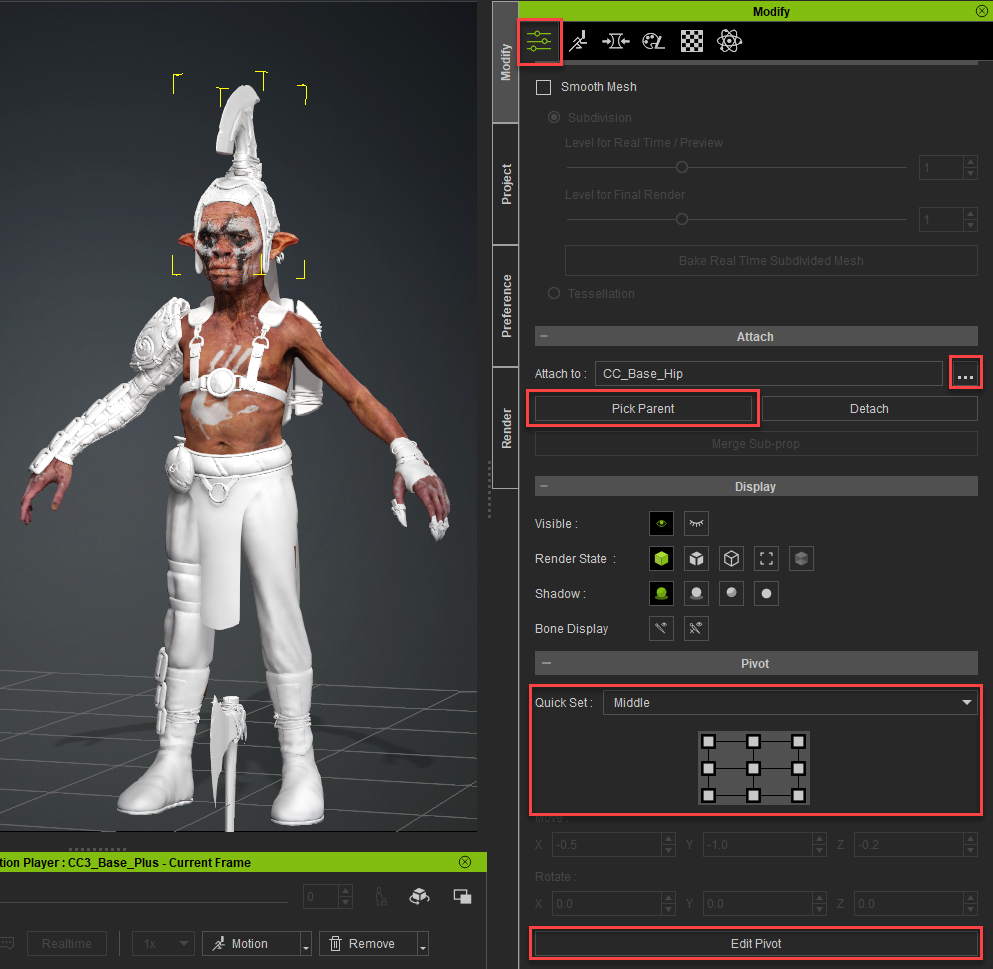

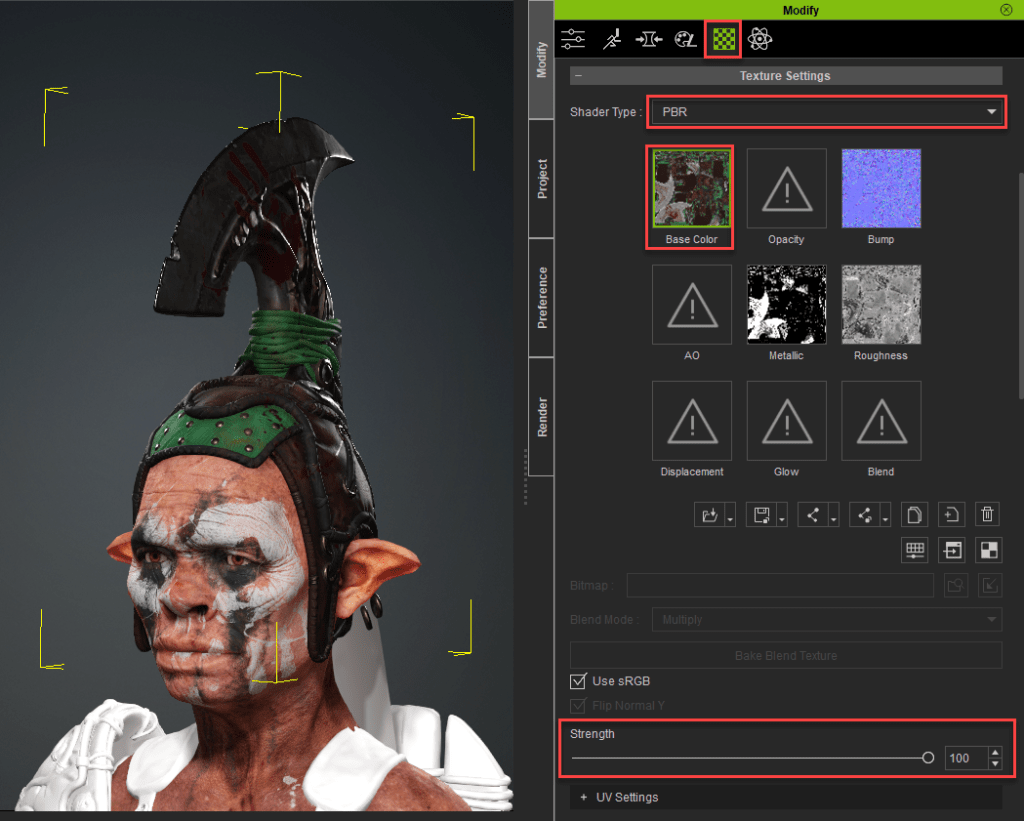

Character Creation: From Concept to Reality

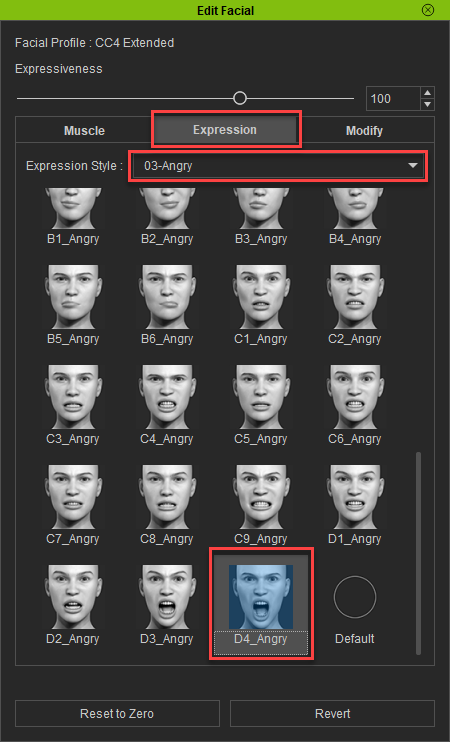

The journey of “KIHA” begins with the design of its characters, crafted with the tools in Character Creator. There, Dom Fred finds a canvas for his vision, shaping each character in detail so that it embodies the essence of the story.

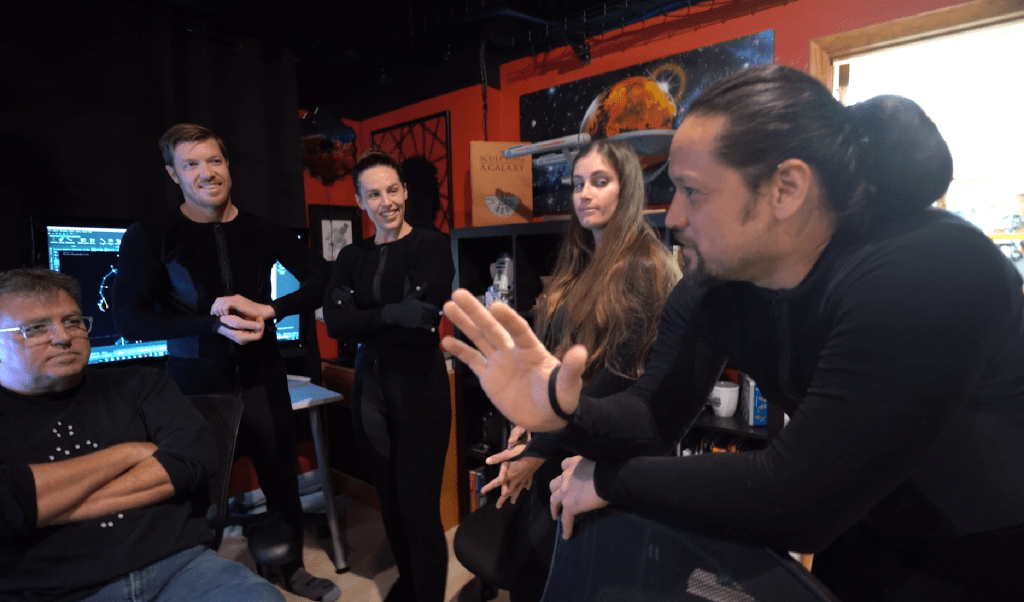

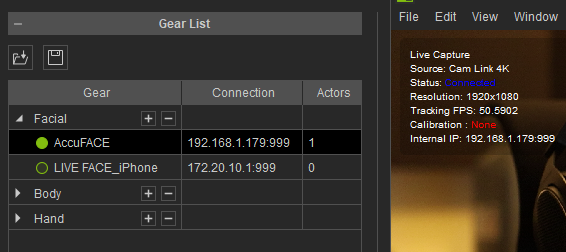

Motion Capture: Breathing Life into the Narrative

Enter ActorCore, a library of high-quality 3D animations that gives “KIHA” a sense of realism. With a diverse array of motion-capture animations at his disposal, Dom Fred selects performances and integrates them into the narrative to evoke emotion and captivate audiences.

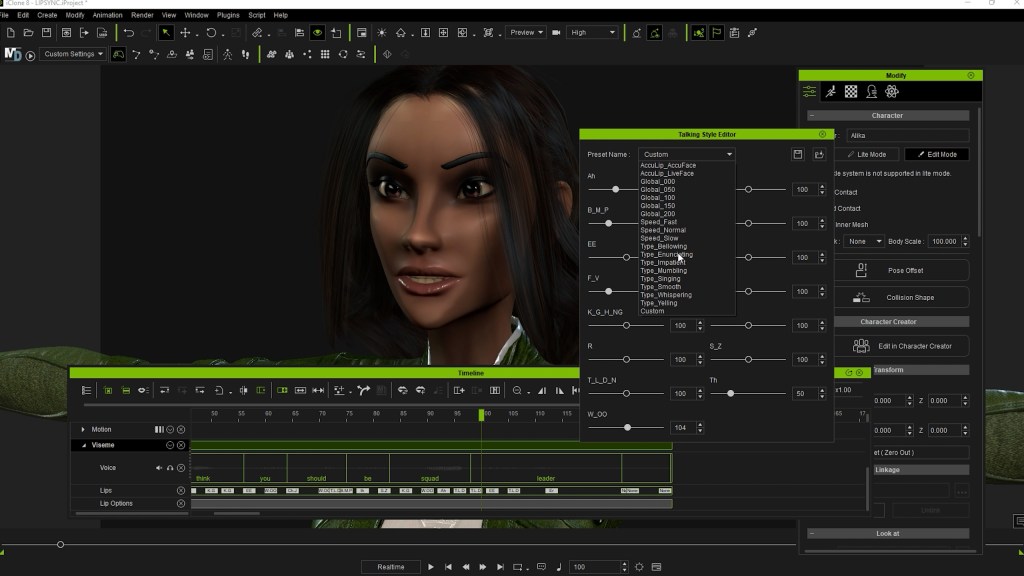

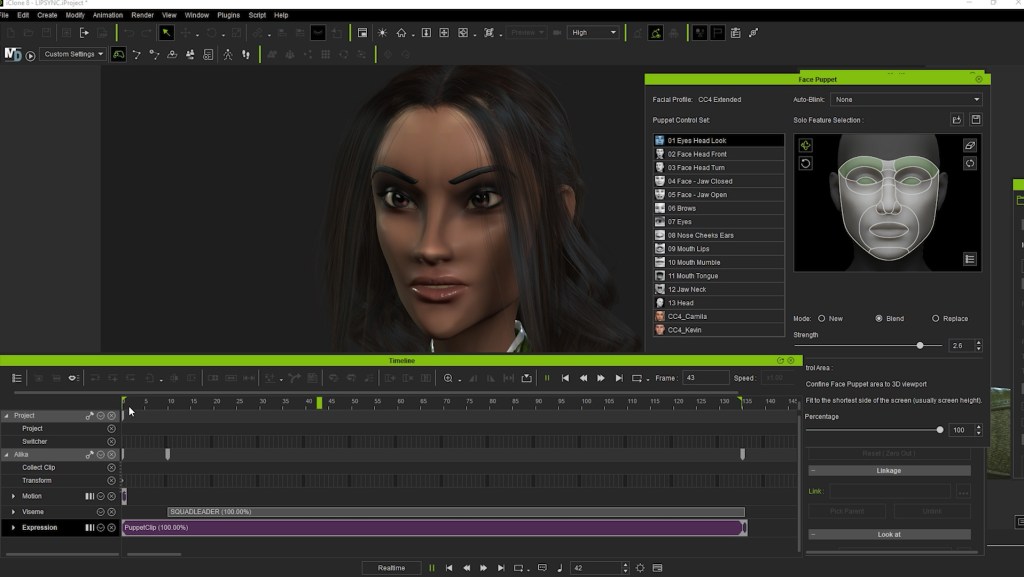

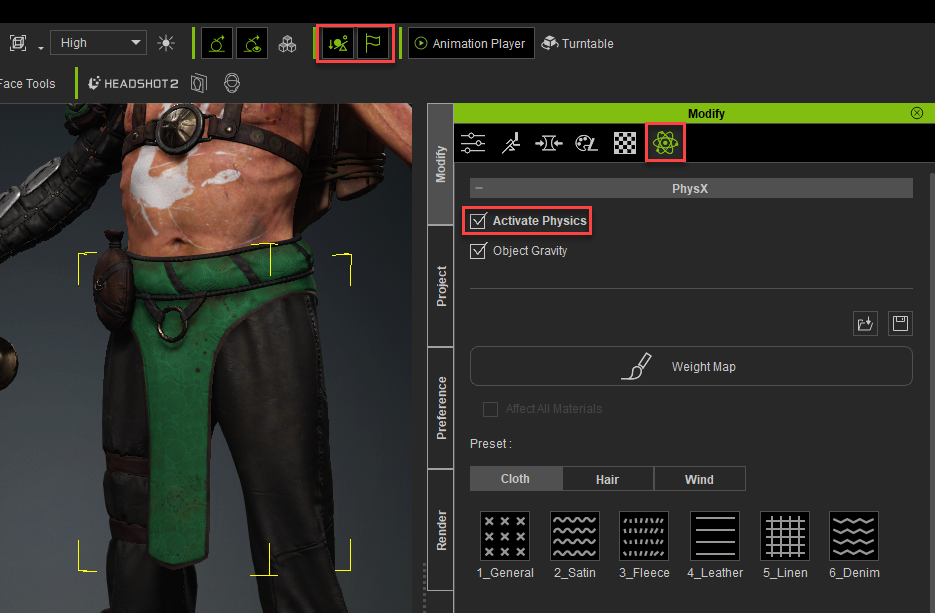

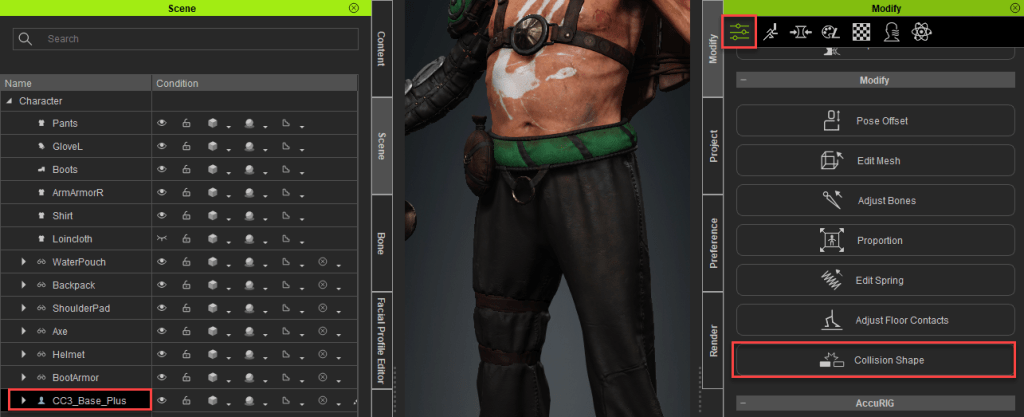

Mastering Animation: The Power of iClone 8

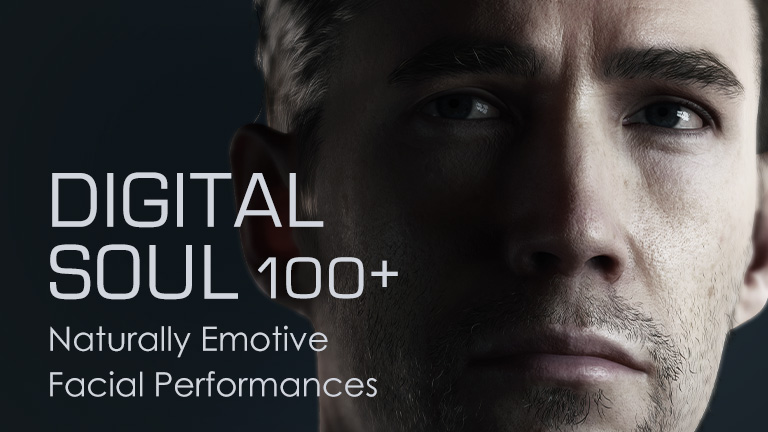

Central to the fluidity and technical precision of “KIHA” is iClone. Dom Fred uses its 3D motion editing tools to give his characters lifelike movements and reactions. With precise control over animation keys, he shapes the action scenes, building moments of tension and intrigue that keep audiences on the edge of their seats.

Real-Time Production: Bridging the Gap with Unreal Engine

As “KIHA” takes shape, the integration of iClone and Unreal Engine becomes a pivotal asset for the director. Through iClone Unreal Live Link, Dom Fred works in real time, fine-tuning the placement of characters, settings, and camera movements. This link between iClone and Unreal Engine gives him the flexibility to adjust each shot until it matches his vision.

The Grand Finale: Lighting, Composition, and Rendering

As the pieces of “KIHA” fall into place, the stage is set for the finale in Unreal Engine. Here, Dom Fred handles the composition, lighting, and rendering that give the film its final look. Adobe Premiere Pro 2024 then serves as the final canvas for post-production, where he edits the film together.

Conclusion: A Vision Transformed

In the realm of digital filmmaking, “KIHA” stands as a beacon of innovation and creativity, a testament to the limitless possibilities of technology and imagination. Through the collaborative synergy of iClone, Character Creator, and Unreal Engine, director Dom Fred breathes life into his vision, transforming dreams into reality with every frame. As “KIHA” takes flight, it invites audiences on a mesmerizing journey through the depths of the human experience, where the lines between reality and fantasy blur, and the essence of storytelling shines bright.

Follow Dom Fred:

LinkedIn:

https://www.linkedin.com/in/freddy-lounana-41015991/

https://www.linkedin.com/in/dom-fred-films-81904194/

YouTube:

https://www.youtube.com/channel/UCaEVZPzkWUsg9Wti9Cao4Vw

Facebook:

https://www.facebook.com/profile.php?id=100063571358642

Vimeo:

https://vimeo.com/823861782