Create true-to-life facial animations with a game-changing workflow that includes accurate lip-syncing, emotive expressions, muscle-based key editing, and best-of-breed iPhone facial capture. A new set of scan-based ExPlus blend-shapes in iClone 7.9 also delivers richer, more comprehensive facial performances.

Powerful Tools Come to Your Aid

Whether humanizing realistic digital doubles or dramatizing stylized characters, iClone provides time-saving tools that help anyone direct their 3D actors’ facial performances and maximize productivity. These tools and features include AccuLips, Face Puppet, ExpressionPlus (in combination with expression presets), the Face Key Editor, and ARKit-based iPhone facial capture.

AccuLips provides automatic lip-syncing: an intuitive, effective way to generate procedural, editable talking animations that lets users of any experience level create accurate, natural, and smooth talking performances. Face Puppet gets you started quickly with 23 predefined facial animation profiles covering diverse personalities and emotions, plus 7 ExpressionPlus profiles for subtle eye and mouth movements. Together, these profiles let artists animate individual facial parts like never before. You can also tone down the animation strength for realistic humans, or increase it for exaggerated expressions on stylized characters. Characters can be further humanized with advanced facial behaviors such as rolling the eyeballs while the eyes are closed, sucking in or biting the lips, chewing with jaw movements, opening the jaw with the lips sealed, poking the tongue inside the mouth, licking the lips, and even silent talking.

Once facial expressions have been created through lip-syncing, facial mo-cap, or puppeteering, facial features can be fine-tuned with the Face Key Editor. These facial keys are overlaid and blended with the existing expressions and stored in the Timeline track for further editing. Three types of expression adjustment are provided: the Muscle panel, Expression presets, and morph sliders. In the Muscle panel, the user selects one or more effective regions and drags with the mouse to drive the corresponding muscle movement across the eyelids, eyes, brows, cheeks, mouth, lips, and nasal area. The Muscle panel includes options for symmetry, head rotation, and adjustable sensitivity to accommodate subtle to drastic movements, and it supports both Standard mode and ExPlus mode (new in v7.9). Expression presets allow quick switching between emotional presets, or access to 4 additional ExPlus ARKit expression sets, including tongue movements, with adjustable strength levels to match production requirements. The morph sliders give direct access to 60 Standard and 63 (52+11) ExPlus blend-shapes; they offer complete control over expression details and also accept extrapolated strength values (from -200 to +200). The sliders also make it easy to see which blend-shapes are being triggered by the expression presets and the Muscle panel, and blend-shape names and values can be inspected when exporting to external 3D tools.
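As a rough mental model of how a face key sits on top of an existing expression, the sketch below treats one frame as a mapping from blend-shape names to strengths, adds the key’s offsets, and clamps the result to the -200 to +200 range the morph sliders accept. The additive blend, the clamp, and the blend-shape names (`Mouth_Smile`, `Jaw_Open`, `Brow_Raise`) are assumptions for illustration only, not iClone’s documented blending or API.

```python
from typing import Dict

# Assumed slider range for extrapolated strengths (the text cites -200 to +200).
MIN_STRENGTH = -200.0
MAX_STRENGTH = 200.0


def layer_face_key(base: Dict[str, float], key: Dict[str, float]) -> Dict[str, float]:
    """Overlay a face key on an existing expression frame (hypothetical model).

    Both arguments map blend-shape names to strengths. Offsets in `key` are
    added to the matching base values and clamped to the slider range;
    blend-shapes that appear only in `base` pass through unchanged.
    """
    result = dict(base)
    for name, offset in key.items():
        value = result.get(name, 0.0) + offset
        result[name] = max(MIN_STRENGTH, min(MAX_STRENGTH, value))
    return result


if __name__ == "__main__":
    # Hypothetical blend-shape names, used only for this example.
    lipsync_frame = {"Mouth_Smile": 60.0, "Jaw_Open": 25.0}
    tweak = {"Mouth_Smile": 30.0, "Brow_Raise": 250.0}
    print(layer_face_key(lipsync_frame, tweak))
```

Running it prints `{'Mouth_Smile': 90.0, 'Jaw_Open': 25.0, 'Brow_Raise': 200.0}`: the smile is strengthened on top of the lip-sync pose, the jaw is untouched, and the out-of-range brow value is clamped.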

The highly expressive, scan-based ExPlus blend-shapes are now mapped one-to-one to iPhone ARKit signals, so captured data is ready for post-layer editing with iClone’s Face Puppet and Face Key Editor.
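A one-to-one mapping means each ARKit channel drives exactly one ExPlus blend-shape, with no mixing. The sketch below illustrates that idea. The left-hand keys are real ARKit blend-shape location names, whose coefficients run from 0.0 to 1.0; the `ExPlus_…` target names and the conversion to a 0 to 100 strength scale are hypothetical placeholders, not iClone’s actual morph names or units. Only a handful of the 52 ARKit channels are listed.

```python
from typing import Dict, Mapping

# A few real ARKit blend-shape location names. The ExPlus target names on
# the right are hypothetical placeholders, not iClone's real morph names.
ARKIT_TO_EXPLUS: Dict[str, str] = {
    "eyeBlinkLeft": "ExPlus_EyeBlinkLeft",
    "eyeBlinkRight": "ExPlus_EyeBlinkRight",
    "jawOpen": "ExPlus_JawOpen",
    "mouthSmileLeft": "ExPlus_MouthSmileLeft",
    "tongueOut": "ExPlus_TongueOut",
}


def retarget_frame(arkit_frame: Mapping[str, float]) -> Dict[str, float]:
    """Map one frame of ARKit coefficients (0.0-1.0) to strengths (0-100).

    Each ARKit channel drives exactly one target blend-shape. Channels that
    are not in the table are skipped rather than guessed.
    """
    morphs: Dict[str, float] = {}
    for name, coefficient in arkit_frame.items():
        target = ARKIT_TO_EXPLUS.get(name)
        if target is not None:
            clamped = max(0.0, min(1.0, coefficient))  # guard against noisy input
            morphs[target] = clamped * 100.0  # assumed percent-style scale
    return morphs
```

Because the mapping is a plain lookup table, captured data lands on named blend-shapes that can then be edited directly, which is what makes post-layer editing straightforward.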

Lip-syncing that Works

AccuLips is a brand-new lip-sync technology that gives characters smoother, more accurate lip-sync results. It can save a massive amount of time when animating dialogue scenes, because AccuLips detects and extracts text and visemes from audio, or imports prepared scripts for precise lip-syncing. Natural speech comes from its co-articulation design, and every word’s visemes and strength levels can be fine-tuned further. AccuLips includes an English dictionary of 200,000 default words, which can be customized by adding new words or editing existing entries.
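A customizable dictionary can be pictured as a default word list plus user entries that take precedence. The sketch below is a hypothetical illustration of that lookup order, not the AccuLips file format or API; it uses ARPAbet phoneme symbols (stress markers omitted) and a three-word stand-in for the 200,000-word default list.

```python
from typing import Dict, List, Optional

# Tiny stand-in for the default dictionary, in ARPAbet phonemes with stress
# markers omitted. These entries are illustrative, not AccuLips data.
DEFAULT_ENTRIES: Dict[str, List[str]] = {
    "key": ["K", "IY"],
    "cool": ["K", "UW", "L"],
    "car": ["K", "AA", "R"],
}


class PronunciationDictionary:
    """Default entries plus user entries that override them (hypothetical)."""

    def __init__(self, defaults: Dict[str, List[str]]) -> None:
        self._defaults = {w.lower(): list(p) for w, p in defaults.items()}
        self._custom: Dict[str, List[str]] = {}

    def add_word(self, word: str, phonemes: List[str]) -> None:
        """Add a new word, or override the pronunciation of an existing one."""
        self._custom[word.lower()] = list(phonemes)

    def lookup(self, word: str) -> Optional[List[str]]:
        """Return the phonemes for `word`, checking user entries first."""
        key = word.lower()
        if key in self._custom:
            return self._custom[key]
        return self._defaults.get(key)


if __name__ == "__main__":
    words = PronunciationDictionary(DEFAULT_ENTRIES)
    words.add_word("iClone", ["AY", "K", "L", "OW", "N"])  # a product name
    print(words.lookup("ICLONE"), words.lookup("car"), words.lookup("zzz"))
```

Keeping user entries in a separate table means a custom pronunciation never destroys the default one, so an override can be removed without reloading the full dictionary.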

The new iClone AccuLips system automatically generates accurate text data and correct viseme timing from imported voice audio, giving users a highly manageable approach to professional lip-sync animation. It generates text from the audio while automatically aligning each word to its corresponding section of the voice. Supplying a text script makes the text-to-voice alignment even more accurate.

Because AccuLips is designed to simulate real human speech behavior, its enhanced mouth shapes for each viseme make lip-sync results more precise and natural for both realistic and stylized characters. In true-to-life talking animation, each mouth shape takes on qualities of the mouth shapes that precede or follow it; that is, the articulators either anticipate the next sound or carry over qualities from the previous one. AccuLips also handles the schwa (/ə/), the weak vowel sound of unstressed syllables, and includes slightly opened mouth shapes for breaks between words and sentences. Mouth movements are governed by pairing 8 lip/dental shapes, 7 tongue positions, and mouth widths, all blended with varied time curves for more natural transitions across a total of 240 possible phoneme combinations. When we pronounce words, a phoneme is affected by the phoneme that follows it, so its viseme can differ: the /k/ in “key,” “cool,” and “car” takes on the shape of the vowel that follows. The same treatment applies in the other direction, where a phoneme is affected by the one before it, as with the vowel in “chips,” “kick,” and “lick.”
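The anticipation and carryover described above can be illustrated with a deliberately simple model: each viseme pose is a weighted mix of itself, the previous pose (carryover), and the next pose (anticipation). This linear neighbor blend, the three-channel `MouthShape`, and the default weights are assumptions for illustration; AccuLips’s own phoneme-pair transition curves are not described in enough detail here to reproduce.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MouthShape:
    """Simplified mouth pose; each channel is normalized to 0.0-1.0."""
    jaw_open: float
    lip_width: float
    tongue_up: float


def coarticulate(
    shapes: List[MouthShape], carryover: float = 0.2, anticipation: float = 0.3
) -> List[MouthShape]:
    """Blend each viseme pose with its neighbors (hypothetical linear model).

    `carryover` is the share taken from the previous pose and `anticipation`
    the share taken from the next pose. At the ends of the sequence, the pose
    itself stands in for the missing neighbor.
    """
    if carryover < 0 or anticipation < 0 or carryover + anticipation > 1:
        raise ValueError("weights must be non-negative and sum to at most 1")
    own = 1.0 - carryover - anticipation
    result: List[MouthShape] = []
    for i, shape in enumerate(shapes):
        prev = shapes[i - 1] if i > 0 else shape
        nxt = shapes[i + 1] if i + 1 < len(shapes) else shape

        def mix(attr: str) -> float:
            # Weighted sum of the same channel across the three poses.
            return (
                own * getattr(shape, attr)
                + carryover * getattr(prev, attr)
                + anticipation * getattr(nxt, attr)
            )

        result.append(MouthShape(mix("jaw_open"), mix("lip_width"), mix("tongue_up")))
    return result
```

For a closed pose followed by a wide-open one, the closed pose already opens slightly (anticipation) and the open pose is a little less open (carryover), which is the qualitative effect the paragraph describes.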

Although it’s good to see every detected viseme align correctly to each word, very frequent lip and jaw movements can look unnaturally mechanical. The smart down-sampling tool effectively reduces this jitter by removing less prominent lip keys, much as people naturally slur when speaking effortlessly. Phoneme-pair design is the foundation of iClone’s lip-sync system, and the 7.9 update removes abrupt transitions between certain viseme pairs and optimizes transition curves for smoother lip blends, especially during fast speech. The strength of lip and jaw movement varies with when and how people speak: it is influenced by speed and by situation, so whispering differs from yelling, and mumbling differs from enunciating. The Talking Style presets provide quick setups of lip, jaw, and viseme strengths optimized for different speaking situations. Overall, iClone’s lip-sync technology makes character lip-sync animation easy and intuitive: the system aligns the phoneme visemes to what the character says, and users can fine-tune the result further.
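One plausible way to thin out overly dense lip keys is to enforce a minimum spacing and, whenever two keys crowd each other, keep only the more prominent one. The sketch below shows that rule; the `LipKey` type, the viseme labels, and the 0.08-second default gap are hypothetical, and iClone’s actual down-sampling criteria are not specified in the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LipKey:
    time: float      # seconds from the start of the clip
    viseme: str      # hypothetical viseme label
    strength: float  # 0.0-1.0 prominence of the mouth shape


def downsample(keys: List[LipKey], min_gap: float = 0.08) -> List[LipKey]:
    """Drop less prominent keys that sit too close to a neighbor (sketch).

    Keys are processed in time order. When a key falls within `min_gap` of
    the last kept key, only the stronger of the two is retained.
    """
    kept: List[LipKey] = []
    for key in sorted(keys, key=lambda k: k.time):
        if kept and key.time - kept[-1].time < min_gap:
            if key.strength > kept[-1].strength:
                kept[-1] = key  # the newer key is more prominent; replace
        else:
            kept.append(key)
    return kept


if __name__ == "__main__":
    dense = [
        LipKey(0.00, "AA", 0.5),
        LipKey(0.05, "EE", 0.9),
        LipKey(0.20, "Oh", 0.4),
        LipKey(0.25, "M", 0.3),
    ]
    print([k.viseme for k in downsample(dense)])
```

With these keys the result is `['EE', 'Oh']`: each crowded pair collapses to its stronger member, which reduces judder while keeping the most visible mouth shapes.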

There are several flexible ways to provide a character’s voice:

- Record the voice directly in iClone; the voice clip appears immediately.

- Use text-to-speech to generate the voice from typed text.

- Import an audio file, and the system generates the corresponding visemes in the timeline.

When a voice file is imported, the system automatically generates a voice track with corresponding visemes on the timeline; with AccuLips, a text track is generated as well. Users can intuitively adjust the voice, the animation, and any selected viseme with the Lips Editor. Lip Options adjusts the Smooth Options and Clip Strength of a selected clip, and the smoothing level can be fine-tuned separately for the lips, tongue, and jaw.

iPhone Face Mo-cap

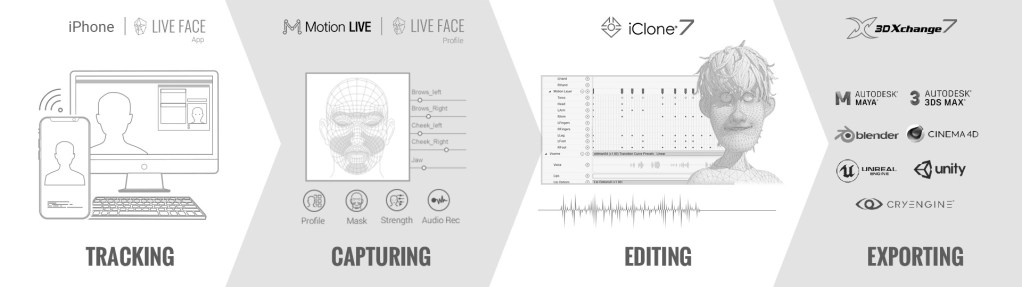

iPhone facial motion capture works with iClone Motion LIVE through the iPhone’s TrueDepth camera system and its high-resolution sensors. The iPhone tracks the face with a depth map and analyzes subtle muscle movements for live character animation. The Reallusion LIVE FACE app streams the captured facial mo-cap data live from the iPhone directly to a PC or Mac, turning the iPhone into a powerful 3D biometric mo-cap camera.

The TrueDepth camera projects and analyzes more than 30,000 invisible dots to create a precise depth map of the face, and analyzes more than 50 different muscle movements to mirror expressions. It is also sensitive to fine details such as eyeball rotation and tongue movement, and it works with different skin tones and in extreme lighting conditions. Runtime calibration recalibrates on the fly without pausing face tracking. Wi-Fi, USB, and Ethernet connections are supported; an Ethernet connection is recommended to minimize lag for optimal facial mo-cap performance.

Designed by animators, for animators: Reallusion’s latest facial mo-cap tools go further, addressing core issues in the facial mo-cap pipeline to produce better animation. From all-new ARKit expressions, to easy adjustment and retargeting of raw mo-cap data, to multi-pass facial recording and essential mo-cap cleanup, we’re covering all the bases to give users the most powerful, flexible, and user-friendly facial mo-cap approach yet. The biggest issue with any live mo-cap is that the data is noisy, twitchy, and difficult to work with, so smoothing it in real time is essential. And when the mo-cap data isn’t right, the model’s expressions aren’t what you want, or the current recording isn’t quite accurate, you need access to back-end tools to make adjustments. Finally, beyond smoothing data in real time from the start, you can also clean up previously recorded clips with proven smoothing methods at the push of a button.
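The two kinds of smoothing mentioned above, live and after the fact, can be illustrated with two generic filters. The sketch below uses an exponential moving average per blend-shape channel for live frames, and a centered moving average for recorded clips. These are stand-in techniques chosen for illustration; the text does not say which smoothing methods Reallusion actually uses, and the class and function names are hypothetical.

```python
from typing import Dict, List


class LiveSmoother:
    """Exponential moving average applied per blend-shape channel, frame by frame."""

    def __init__(self, alpha: float = 0.5) -> None:
        # alpha = 1.0 passes raw data through; smaller values smooth more but lag more.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Dict[str, float] = {}

    def update(self, frame: Dict[str, float]) -> Dict[str, float]:
        """Smooth one incoming frame using only past frames (causal)."""
        smoothed: Dict[str, float] = {}
        for name, value in frame.items():
            previous = self._state.get(name, value)  # first sample seeds the filter
            smoothed[name] = previous + self.alpha * (value - previous)
        self._state.update(smoothed)
        return smoothed


def smooth_clip(values: List[float], radius: int = 1) -> List[float]:
    """Offline cleanup: centered moving average over one recorded channel."""
    if radius < 0:
        raise ValueError("radius must be non-negative")
    out: List[float] = []
    for i in range(len(values)):
        window = values[max(0, i - radius): i + radius + 1]  # shrinks at the edges
        out.append(sum(window) / len(window))
    return out
```

The split mirrors the live/recorded distinction: the live filter can only look backward, so it trades smoothness for lag, while the clip filter can look at frames on both sides of each sample because the whole recording is already available.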