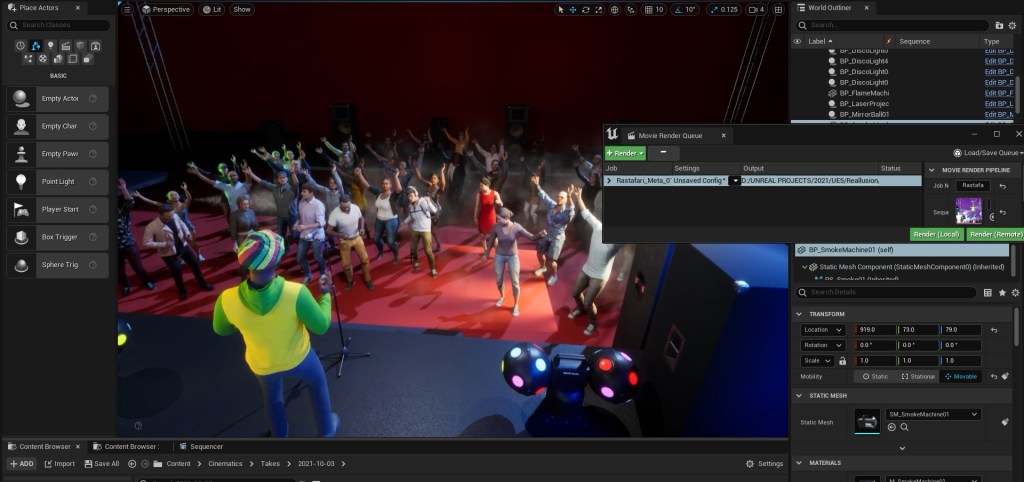

Reallusion’s 2021 iClone Lip Sync Animation Contest launched in July 2021 with the aim of showcasing the best 30-second talking animations featuring lip-sync, facial animation, and great characters. Within three months, the contest received 468 submissions from 60 countries. Contestants drew inspiration from comedy, movies, music videos, and storytelling, remaking them into detailed talking animation performances. Besides iClone and Character Creator, the contest also welcomed entries made with other renderers and engines, including Blender, Cinema4D, Unreal, Unity, and more.

The final results exemplified iClone’s game-changing facial animation workflow for accurate voice lip-sync, puppet emotive expressions, muscle-based face key editing, and professional iPhone facial data capture. A third of the submissions used Blender and MetaHuman, demonstrating that iClone is a highly compatible facial animation pipeline tool for Unreal MetaHuman and Blender users, while Replica’s AI-voice plugin made talking animations easier than ever.

“At Reallusion we are proud to see an influx of high-level artists that are adopting Character Creator and iClone into their pipelines. The contest proved the viability of using iClone’s powerful lip-syncing and facial animation tools in professional production, when time and budget are of the essence. We congratulate all who participated, and look forward to bringing more innovations to the industry.”

– Enoc Burgos, Reallusion Director of Partnership Marketing

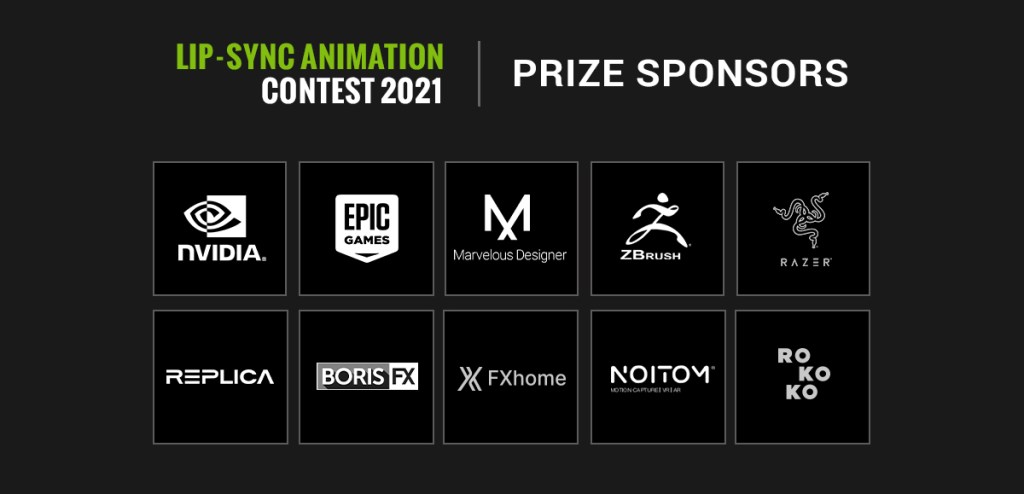

The 2021 iClone Lip Sync Animation Contest was hosted by Reallusion and sponsored by industry leaders including NVIDIA, Epic Games, Noitom, Rokoko, Replica Studios, Pixologic, CLO Virtual Fashion Inc, FXHome, Boris FX, and Razer. Through this contest, iClone and Character Creator proved to be among the most powerful facial and body animation pipelines for all animators.


WINNERS

The iClone Lip-Sync Animation Contest 2021 offered cash and prizes valued at over USD 40,000, thanks to A-list sponsors that partnered with Reallusion.

BEST CHARACTER ANIMATION CATEGORY

1st Place: Ice Queen

By Loic Bramoulle, Cinematics for indies and AA studios. Render: Blender

“I used an iPhone XR for capturing a base performance, while simultaneously speaking over the audio clip, and video capturing my face, which allowed me to manually polish the expressions more easily while aiming for hand-animated, keyframed facial animations. The process was pleasingly fast, as the motion capture was essentially used as an animation blocking, but without all the manual work. Polishing it by hand wasn’t as fast, but it seems much more efficient overall than animating from scratch.” – Loic Bramoulle

2nd Place: Do Right, Jessica Rabbit

By Jasper Hesseling, Story loving, professional 3D Artist. Render: iClone

“I had a blast working on this piece with the lip-sync tools in iClone. I have still much to discover but I already learned a lot.” – Jasper Hesseling

3rd Place: Love, Rosie

By Petar Puljiz, Unreal 3D Artist. Render: Unreal

“Thank Heaven for these animation tools, which you need to have in your pipeline, no matter if you are a professional or a beginner.” – Petar Puljiz

BEST CHARACTER DESIGN CATEGORY

Characters that showcased exceptional character design, character-setting, and voice matching.

1st Place: The Pumpkin Cave

By Varuna Darensbourg, Artist & Game Developer. Render: Unreal

“Using Reallusion’s tools to breathe life into my characters has been a thrilling experience. They can save time, which is a huge boon, but they work so well that it makes daunting tasks enjoyable. Using iClone AccuLips for the 2021 contest was incredible! The accuracy blew my mind and combining it with iClone Motion LIVE and the Live Face iPhone app for layering allowed me to do far more than I expected.” – Varuna Darensbourg

2nd Place: Judar

By Gergana Hristova, Freelance 3D Artist. Render: iClone

“I never knew that animating in 3D will turn out to be more enjoyable and quick, thanks to iClone!” – Gergana Hristova

3rd Place: Animal Farm

By Jason Taylor, Videographer and Director. Render: Unity

“iClone has been a game-changer for me. I love the ease of use for lip-syncing and the ability to fine-tune facial animations using facial keys and facial mocap. Amazing stuff. Thank you.” – Jason Taylor

SPECIAL AWARDS & HONORABLE MENTIONS

The judging process for the Lip-Sync Animation Contest 2021 proved to be quite difficult and took many hours of deliberation. Besides the top three placements in Best Character Animation and Best Character Design, Reallusion also selected 41 winners for special awards and honorable mentions. See the Winner Page for winner details and showcases.